7 Startups Giving Artificial Intelligence (AI) Emotions

We’ve written a lot about artificial intelligence (AI) here at Nanalyze, and just when we feel like there’s not much more we can add to the topic, we find loads more interesting companies to write about. There has been a lot of talk lately about how machines just won’t be able to capture that “human element” of emotions, or “emotional intelligence” as it is often called. The act of building an emotional quotient (EQ) as a layer on top of AI is referred to as affective computing, a topic we covered before. The first step towards AI being able to demonstrate emotional intelligence is that it needs to see emotions in our behavior, hear our voices, and feel our anxieties. To do this, AI must be able to extract emotional cues or data from us through conventional means like eye tracking, galvanic skin response, voice and written word analysis, brain activity via EEG, facial mapping, and even gait analysis.

Here are 7 startups attempting to seamlessly integrate AI, machine learning, and emotional intelligence.

Beyond Verbal

In addition to marketing, there are also possible healthcare applications for this technology. The Mayo Clinic is using Beyond Verbal to identify vocal biomarkers that can help identify Coronary Artery Disease (CAD), Autism Spectrum Disorder (ASD), and Parkinson’s Disease (PD) with the belief that this technology can help provide an early diagnosis. With 2.3 million recorded voices across 170 countries and 21 years of research, they might just be in a position to make the FDA pay attention.

If you want to try the technology on yourself, they have a free app called Moodies that you can speak into and it will tell you what emotions you’re exhibiting. Any newly minted MBAs reading this should use that technology to build a breathalyzer that you can speak into on your smartphone, then send it to us so we can use it on our writers when they come into work in the morning.

nViso

Founded in 2005, nViso is a privately held Swiss startup with a $750K grant that was originally meant to develop an automatic prediction process for accurately categorizing patients who need tracheal intubation (a plastic tube down the throat), a very costly procedure, for surgeries involving general anesthesia. Like CrowdEmotion, nViso’s technology tracks the movement of 43 facial muscles using a simple webcam and then uses AI to interpret your emotions.

The Bank of New Zealand (BNZ) tried nViso’s technology on about 200,000 people (about 6% of all adults in New Zealand) and found that it rose from the #4 position to the #1 most preferred bank consumers would move to. BNZ also moved from #5 to #2 in national rankings as a good place to manage clients’ money when it deployed nViso’s EmotionAdvisor. It’s kind of hard to see how that would even be possible, but here’s a case study with all the details.

Receptiviti

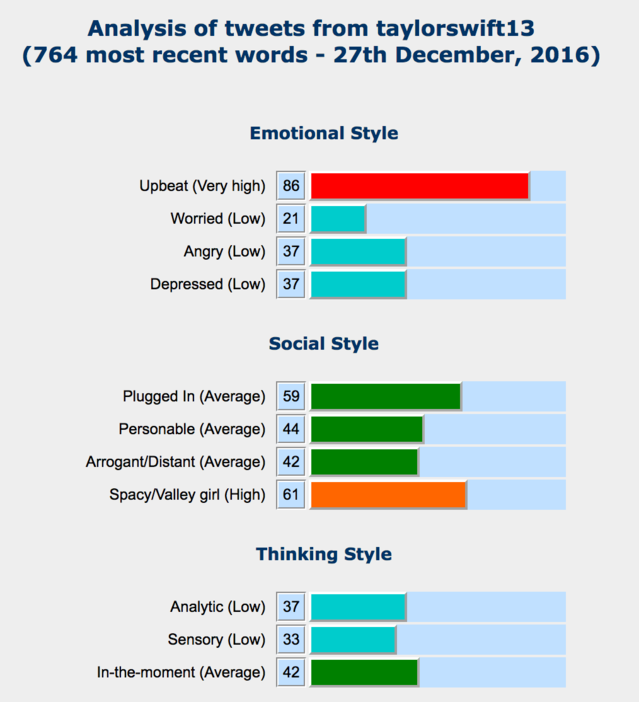

While the tone of your voice may disclose how you’re feeling, did you know that what you write can as well? Founded in 2014, Toronto-based startup Receptiviti has taken in $690K in total funding to develop a proprietary technology called Linguistic Inquiry and Word Count, or LIWC2015, which looks at what you write and then uses it to gather insights about your character, emotions, level of deception, or even how you make decisions. The company uses Natural Language Processing (NLP), a branch of artificial intelligence, to capture people’s emotions, social concerns, thinking styles, psychology, and even their use of parts of speech. Here’s an analysis they did on some of the literary genius produced by Taylor Swift on Twitter:

While one obvious use might be to make chatbots actually tolerable, the technology can be used as a foundation for doing some serious armchair psychology on customers, employees, job applicants or even the president. That’s right, as it turns out President Trump’s tweets are likely coming from two different sources. We decided to ask Receptiviti what they thought about our commentary on them and here are the three attributes they came back with:

We didn’t see ridiculously good looking in there but aside from that, pretty much spot on. You can test Receptiviti yourself and see what it has to say about what you write.
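For the curious, the basic "word count" idea behind dictionary-based text analysis can be sketched in a few lines of Python. This is only a toy illustration under our own assumptions: the `CATEGORIES` dictionary and the `category_percentages` function are hypothetical names we made up, and the real LIWC2015 dictionary is proprietary and far larger, so don't read this as Receptiviti's actual method.

```python
import re
from collections import Counter

# Hypothetical toy categories for illustration only; not the real LIWC dictionary.
CATEGORIES = {
    "positive_emotion": {"love", "great", "happy", "good"},
    "negative_emotion": {"hate", "sad", "angry", "bad"},
    "first_person": {"i", "me", "my", "we", "our"},
}

def category_percentages(text):
    """Return the percentage of words in `text` that fall into each category."""
    # Lowercase the text and pull out simple word tokens.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter()
    for word in words:
        for name, vocab in CATEGORIES.items():
            if word in vocab:
                counts[name] += 1
    # Avoid dividing by zero on empty input.
    total = len(words) or 1
    return {name: 100.0 * counts[name] / total for name in CATEGORIES}

if __name__ == "__main__":
    print(category_percentages("I love my great job"))
```

A real system would layer much more on top of this (larger vocabularies, word stems, and models trained on labeled text), but the output has the same flavor: a profile of how much of your writing falls into each category.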

BRAIQ

Car manufacturers know that 75% of Americans are actually “afraid” of self-driving cars and don’t trust them. BRAIQ hopes to earn this trust with technology that intuitively reads your emotional signals, so that you enjoy the ride rather than worry about getting there. BRAIQ sees $4 billion of funding commitments for autonomous driving research over the next 10 years that they can tap into. With an estimated 20 million fully autonomous vehicles hitting roads by 2025, they hope their technology will be ubiquitous and enabled by hidden sensors so you’ll never even know it’s there.

NuraLogix

Founded in 2015, Toronto startup NuraLogix started with a $115K Idea to Innovation (I2I) grant to develop a technology that can read human emotions and can even tell if you’re lying. The startup developed a technique called Transdermal Optical Imaging™ (TOI™) that “reads” a person’s emotional state by using a conventional video camera to extract information from the blood flow underneath the skin of the face. Contrary to what Pinocchio was told, it is not a growing nose that reveals whether you’re lying but the blood flow around the area of the nose. The technology has applications in marketing, research, and health, with the software expected to be deployed into the Cloud this year. A mobile phone version is still years down the road, so you can still safely answer the “honey, do I look fat in these” questions without any backlash.

Emoshape

The chip will use algorithms to identify any combination of the twelve primary emotions, allowing an intelligent device to manifest potentially 64 trillion distinct emotional states. The EPU is available for purchase so go buy one and see what it can do yourself.

CrowdEmotion

Founded in 2013, CrowdEmotion is a privately-held London-based startup with a technology to gauge human emotions visually by mapping the movements of 43 muscles on the human face. BBC StoryWorks used this technology in a 2016 study called ‘The Science of Engagement’ where they measured the second-by-second facial movements of 5,153 people while viewing English language international news in six of BBC’s key markets. CrowdEmotion currently offers the technology over the cloud and has a fair number of clients already including, of course, the BBC. You can upload a video to their website and then it will give you some running commentary on the sort of emotions you’re exhibiting.

Conclusion

Our initial thoughts were that because you know you are being observed, you will behave differently, so this technology will probably be most effective when applied undetected. That’s not exactly true though. According to a subject matter expert we spoke to, “micro facial expressions are really difficult to hide consciously. We actually only see impact on the first 1-2 seconds if at all. This has been consistent across billions of facial data points”. The very discussion of hidden emotion analysis, though, raises privacy concerns.

We discussed this with Matthew Celuszak, CEO of CrowdEmotion, and his comments were that “in most countries, emotions are or will be treated as personal data and it is illegal to capture these without notification and/or consent. How emotion companies handle CCTV data is a bit of a grey area in how notification is done, but turning on a camera or microphone without consent is a sure fire way to kill your company”. This is certainly something to be aware of for companies like BRAIQ that want to observe passenger emotions undetected. The next time you communicate with a smart device, think about the fact that you may be talking to something that reads emotions better than you do.
